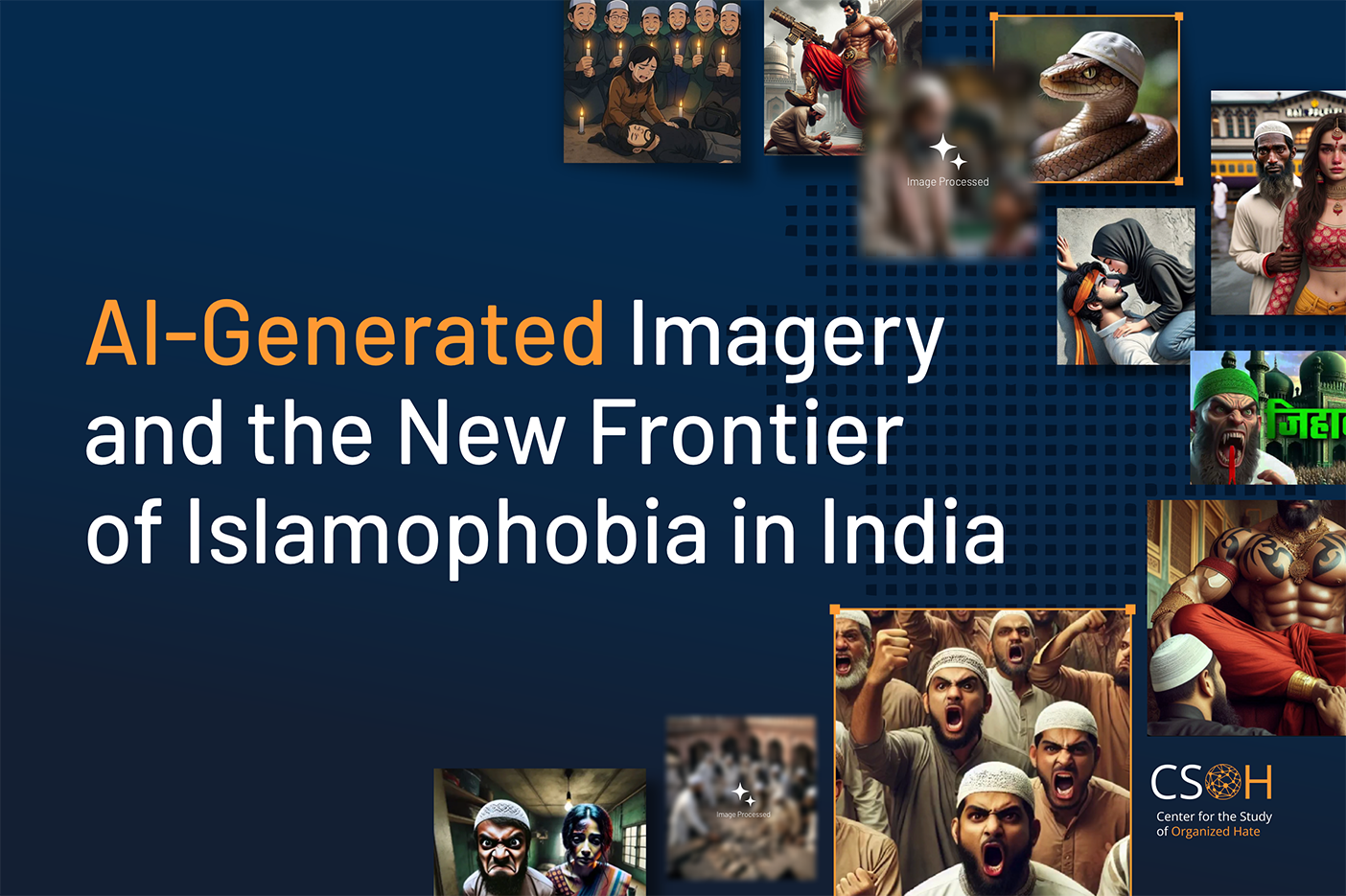

Washington, D.C. (September 29, 2025) — The Center for the Study of Organized Hate (CSOH) today released a new study documenting how generative AI tools are being weaponized to produce and spread anti-Muslim visual hate at scale across social media platforms in India.

The report examines activity from 297 Hindu nationalist accounts with a documented history of targeting religious minorities on X (formerly Twitter), Instagram, and Facebook between May 2023 and May 2025. Within this dataset, researchers identified 1,326 AI-generated harmful posts directed at Muslims. While activity remained minimal in 2023, the use of AI-generated content rose sharply from mid-2024 onward, coinciding with the rapid adoption of generative AI tools in India. Instagram emerged as the most powerful amplifier, driving 1.8 million interactions (a sum of likes, comments, and shares) across 462 posts, compared with 772,400 on X from 509 posts and 143,200 on Facebook from 355 posts.

The study found that sexualized depictions of Muslim women drew the highest engagement, with 6.7 million interactions, highlighting the gendered nature of Islamophobia, in which misogyny and anti-Muslim hate converge. The research identifies four dominant narrative patterns: the sexualization of Muslim women; exclusionary and dehumanizing rhetoric; conspiratorial narratives such as “Love Jihad,” “Population Jihad,” and “Rail Jihad”; and the aestheticization of violence, including the use of stylized or animated imagery that renders brutality palatable to broader audiences.

Hindu nationalist media outlets, including OpIndia, Sudarshan News, and Panchjanya, played a central role in producing and amplifying this synthetic hate, embedding AI-generated Islamophobia into mainstream discourse.

Across X, Facebook, and Instagram, we reported 187 posts for violating community guidelines. Of these, only one was removed on X, a striking demonstration of the platforms’ failure to enforce their own policies and address the rising tide of AI-generated hate.

The report makes several recommendations, including that AI companies embed safety and transparency by default, that regulators and platforms support independent watchdog consortia, and that cross-industry collaborations be established to detect and disrupt synthetic hate campaigns.

The full report is available for download here.

For media inquiries or to request interviews with the report authors, please contact: [email protected].