Introduction

This report documents the use of generative Artificial Intelligence (AI) to produce and disseminate anti-Muslim visual hate content in India. The use of AI-generated images exploded globally in 2022, drawing wide interest and enthusiasm for their many potential uses. However, their role in shaping online hate speech remains underexplored. This is especially true for India’s volatile digital and political landscape. While deployment of social media for spreading hate in the Indian context has received significant attention and has been flagged through numerous studies, reports, and journalistic articles, including those produced by the Center for the Study of Organized Hate (CSOH), the deployment of harmful AI-generated content has not yet been analyzed. As ChatGPT seeks to access the potentially vast Indian market by offering an inexpensive subscription plan priced at less than $5 a month, the need for such analysis becomes especially pressing. This report offers an early and urgent intervention into the ways AI tools such as Midjourney, Stable Diffusion, and DALL·E are being used to generate synthetic images that fuel hate and disinformation against India’s Muslims. By examining AI-generated images that dehumanize, sexualize, criminalize, or incite violence against Muslims, the report documents how emerging technologies are being weaponized.

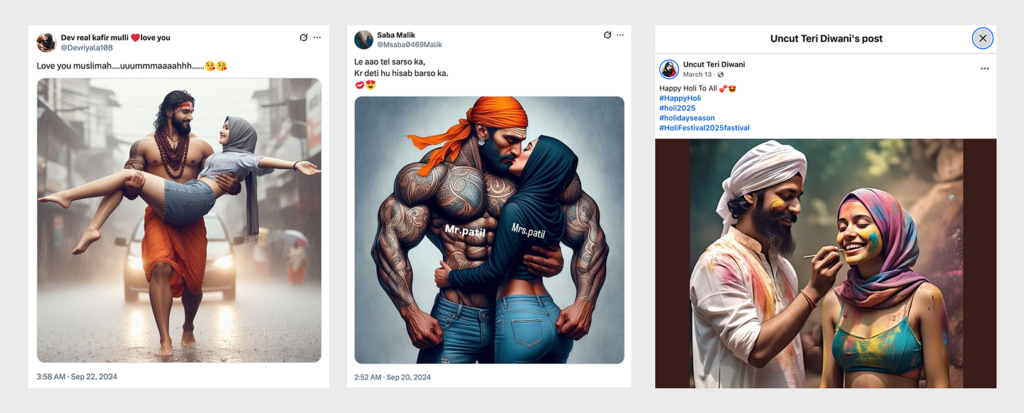

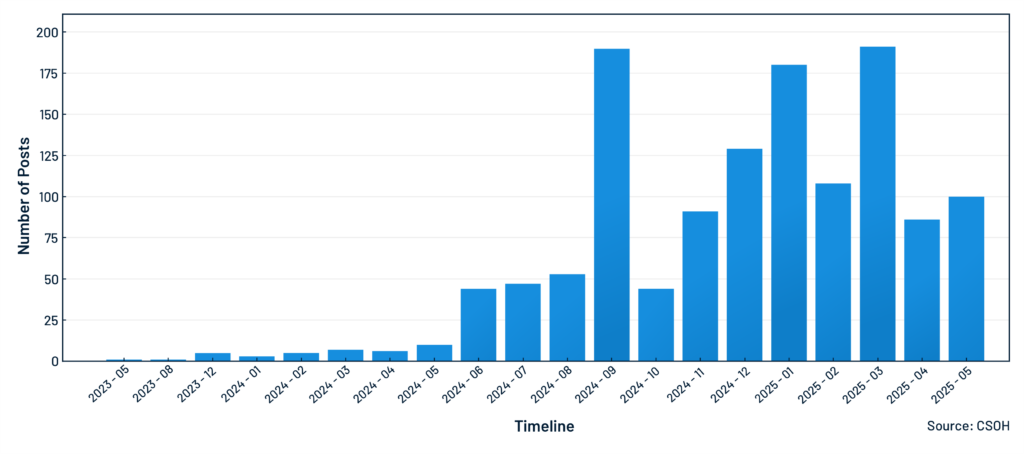

The dataset analyzed for this report comprises 1,326 publicly available AI-generated images and videos retrieved from 297 public accounts in Hindi and English across X (formerly Twitter), Facebook, and Instagram between May 2023 and May 2025. Within this time frame, the posts are concentrated from January 2024 to April 2025. The use of AI-generated content to spread hate spikes after January 2024. These 297 public accounts were first selected through purposive sampling, as detailed in the methodology section below. They reflect extremist right-wing voices in the Indian internet space, with an active and consistent record of posting hateful content online, significant follower counts, and high engagement for their posts. The 1,326 posts were identified from the 297 accounts through qualitative coding. Each post was manually verified. The archive was then coded for thematic and narrative patterns, which identified the key categories, subcategories, and tropes of anti-Muslim hateful content. The analysis revealed four main categories across the archive: the sexualization of Muslim women, exclusionary and dehumanizing rhetoric, conspiratorial narratives, and the aestheticization of violence. The report also tracked how such posts were amplified across platforms by Hindu right-wing media outlets and networks.

We have focused on the impact of the content produced by this group of accounts over a period of two years. We wish to emphasize that a relatively small number of accounts have created and amplified a significant volume of hateful speech in a limited time period, encompassing numerous themes and utilizing various strategies to avoid detection. Consequently, the widespread adoption of ChatGPT in the Indian context may well result in an explosion of such content with grave implications for India’s religious minorities, including threats, psychological harm, and physical violence. The large-scale dissemination of hateful content also carries serious risks of damaging social relations between groups, undermining the principles of constitutional secularism, and attenuating both democratic institutions and the spirit of democracy in Indian society and culture. The proliferation of hateful AI-generated content threatens to further colonize the Indian information sphere, which is already marked by rampant misinformation, anti-minority bias, and a severe crisis of credibility.

The structure of the report unfolds as follows. First, we discuss the global and national context in which AI-generated hate content circulates. Next, we detail our research methodology, including processes of data collection and verification. Following a summary of key findings, the core sections present an analysis of dominant narrative trends such as conspiratorial, exclusionary, sexualized, and aestheticized content. This is followed by a semiotic analysis of the images and an examination of the Hindu far-right media’s role in amplifying the images. The report concludes with a reflection on the broader implications of AI-enabled hate production in India and a set of recommendations for stakeholders.

Hateful Use of AI-Generated Imagery

AI-generated images are pictures produced entirely by software rather than cameras. A generative model, typically a diffusion system like Stable Diffusion or DALL·E, takes a text prompt, starts with random noise, and iteratively “denoises” it by applying patterns it has learned from billions of training photos until a brand-new image that has never existed before appears, often in just a few seconds, on-screen on consumer hardware like a laptop or cellphone. Google’s psychedelic “DeepDream” experiments briefly captivated the internet in 2015, but AI-generated images only became a mass phenomenon after mid-2022. Midjourney opened its Discord beta on July 12, 2022. Stable Diffusion’s open-source model was released on August 22, 2022, and amassed roughly 10 million users within two months. OpenAI lifted the DALL·E 2 wait-list on September 28, 2022, at which point 1.5 million people were already creating more than two million pictures daily.
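The iterative “denoising” loop described above can be caricatured in a few lines of Python. This is a toy sketch under strong assumptions, not a real diffusion model: the "learned pattern" is a hard-coded list of five numbers standing in for an image, and the function name `toy_denoise`, the step count, and the step size are all hypothetical choices made for illustration.

```python
import random

def toy_denoise(target, steps=50, step_size=0.2, seed=0):
    """Start from random noise and repeatedly remove a fraction of the 'predicted noise'."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # begin with pure random noise
    for _ in range(steps):
        # Stand-in for a trained network (assumption): the "predicted noise" is
        # simply the gap between the current sample and the hard-coded pattern.
        predicted_noise = [xi - ti for xi, ti in zip(x, target)]
        # Remove a fraction of that noise, moving the sample toward the pattern.
        x = [xi - step_size * ni for xi, ni in zip(x, predicted_noise)]
    return x

pattern = [0.0, 0.5, 1.0, 0.5, 0.0]  # hypothetical "learned" pattern
result = toy_denoise(pattern)
max_error = max(abs(r - p) for r, p in zip(result, pattern))
```

In an actual system the noise prediction comes from a neural network conditioned on the text prompt, which is what lets the same loop produce different images for different prompts.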

India has an estimated 900 million internet users, and Indians have played an important role in engaging with and shaping the landscape of AI-generated imagery. A survey by LocalCircles found that nine percent of Indian AI-platform users, numbering about 22 million, employ tools “for creating or enhancing pictures.” While much of this use is innocuous, routine, everyday activity, a growing climate of hate, intolerance, and violence in the country means that AI image generation is being used to target religious minorities, particularly Muslims and Christians, and other marginalized Indian groups such as caste-oppressed communities.

This report examines the state of AI-generated hateful visual content in India. We use the term “AI-generated hateful content” to cover all images and videos produced with generative AI tools that (1) target a protected group with negative stereotyping, dehumanization, or incitement to harm, and (2) are disseminated with the reasonably foreseeable effect of amplifying hostility against a protected group. Protected groups in this context refer to communities historically vulnerable to structural discrimination and violence, such as those marginalized by religion, caste, gender, or ethnicity. In the Indian context, these groups include religious minorities, such as Muslims, Christians, and Sikhs, as well as caste-oppressed groups such as Dalits. Although we note international parallels in the patterns analyzed in the report, the empirical analysis undertaken in the report centers on posts related to the Indian context.

The rapid advancement of Artificial Intelligence (AI) text-to-image technology has introduced a new set of challenges for the global landscape of disinformation and extremism. AI text-to-image generation has made creating photorealistic disinformation cheap, fast, and deployable against specific targets. The Royal United Services Institute’s June 2025 report, Online Hate Speech and Discrimination in the Age of AI, shows that generative AI has become a force multiplier for online hate, allowing extremist actors to produce synthetic visuals that align neatly with existing prejudices.

Far-right parties and actors across Western Europe are increasingly using text-to-image technology to fuel fear-based narratives. After the 2024 Southport stabbings in the U.K., which involved a teenager killing three young girls, AI-generated images were used to stoke unrest and spread Islamophobic and anti-immigrant hate online based on false claims about the attacker’s identity. In April 2025, Italy’s opposition parties filed a complaint against Deputy Prime Minister Matteo Salvini’s League party for distributing AI-generated images depicting men of color assaulting women or police, based on spurious connections with immigration crime reports. The vilification of people of color and immigrants is a persistent trope in the increasing use of AI-generated imagery by the global far-right. A European Digital Media Observatory (EDMO) investigation found that across Europe, far-right parties and influencers from Germany’s Alternative für Deutschland (AfD) to Poland’s Sovereign Poland to France’s Reconquest, Italy’s League and Ireland’s The Irish People have used AI-generated images and videos that juxtapose “ideal” and idyllic white communities with ominous-looking and threatening dark-skinned migrants to stoke xenophobic fears.

A key dynamic underpinning this development is the rise of a phenomenon called slopaganda, that is, the deliberate use of cheap, abundant, and low veracity synthetic content to seed hate across digital spaces. Such AI slop proliferates in significant measure because generative tools make the creation and sharing of such content easy, allowing its producers to flood platforms and crowd out other content. These tools enable producers of slopaganda to game the algorithmic principles that are key to the major platforms. Far-right organizations across the globe also use these tactics to increase the visibility of extremist content. They are adept at using popular filters and trends, often utilizing a commonly recognizable aesthetic language to boost the popularity of such content.

Along with xenophobic propaganda, AI-generated imagery has been harnessed to fuel misogynistic hate. In Australia, eSafety Commissioner Julie Inman Grant flagged a sharp rise in AI-generated sexual deepfakes circulating among students. She urged schools to mandatorily report such incidents under new state laws, with the threat of potential fines up to $49.5 million per breach. A survey of over 16,000 respondents across ten countries found that 2.2 percent of the survey participants had been victimized by deepfake pornography and 1.8 percent admitted to circulating such content, revealing that existing non-consensual imagery laws are insufficient deterrents to the problem. Indeed, the combination of different kinds of prejudice in hateful speech is a common feature of much hateful content generated through AI tools.

Anti-Muslim Hate in India

Global trends related to the misuse of AI-generated imagery are alarming enough, but India presents a particularly worrisome case of the phenomenon. Over the past decade, anti-Muslim sentiment in India has strengthened and is increasingly visible across mainstream political discourse, media narratives, and digital platforms. Anti-Muslim sentiments have manifested themselves in various forms, including targeted sectarian violence, mob lynchings, inflammatory hate speeches, forced evictions of Muslims, destruction of Muslim properties and places of worship, economic exclusion and marginalization, and the normalization of conspiratorial rhetoric that depicts Muslims as outsiders, security threats, or a demographic danger to the Indian nation.

India possesses one of the world’s largest and most active digital ecosystems. This vast digital infrastructure enables content to be produced, shared, and consumed at unprecedented speed and volume across multiple platforms and in multiple languages. A deeply troubling aspect of the Indian online ecosystem is the use of digital tools and platforms to attack historically marginalized communities, such as Muslims, Christians, Dalits, and indigenous groups, as well as journalists, academics, and civil society actors. Though reflective of a global trend, the abuse of digital tools has taken on radically dangerous proportions in India.

A report by the Center for the Study of Organized Hate (CSOH), published in February 2025, showed that, of the 1,165 hate speech events CSOH researchers had recorded in 2024, as many as 995 were first shared or live-streamed on social media platforms. Another CSOH report exposed how cow vigilante groups were using Instagram to organize, fundraise, incite, and glorify violence against Muslims. YouTube has similarly been used to host ‘Hindutva pop’ music that glorifies extremism and targets Muslims. Social media platforms have played a significant role in the propagation and normalization of hate speech and disinformation against Muslims. In 2021, Meta employee and whistleblower Frances Haugen revealed compelling evidence that the organization was aware that users in India were being flooded with hateful propaganda and disinformation against Muslims, which was instrumental in fueling hate and violence against the community. Given this situation, AI‑generated hate content and disinformation have found fertile ground in the country, routinely targeting India’s Muslim population of approximately 200 million. This report focuses on one specific facet of this phenomenon: the deployment of generative AI to produce and spread anti-Muslim visual hate content online.

Key Findings

- Generative Artificial Intelligence (GAI) tools are starting to play a central role in the production of anti-Muslim hate in India, allowing widely accessible technology to generate and amplify Islamophobic narratives at an unprecedented scale.

- We identified 1,326 AI-generated hateful posts targeting Muslims between May 2023 and May 2025 from 297 accounts across X, Instagram, and Facebook that were specifically selected for this study. Activity was minimal in 2023 and early 2024 but rose sharply from mid-2024 onward, reflecting the growing popularity of and easier access to AI tools, which accelerated the creation of hateful content.

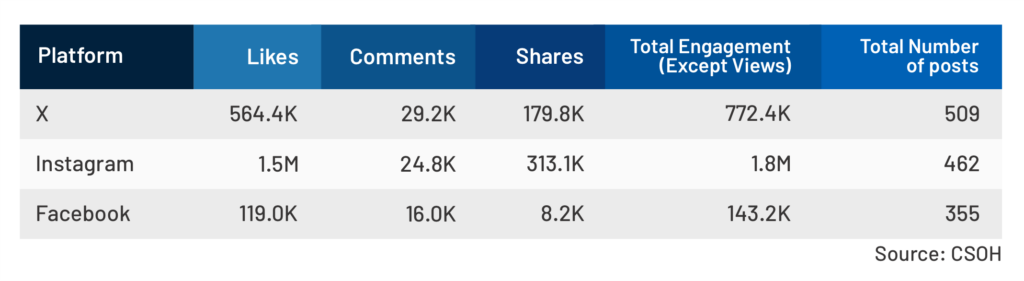

- Instagram drove the highest engagement (a sum of likes, comments, and shares) with 1.8M interactions across 462 posts, compared to 772.4K on X (509 posts) and 143.2K on Facebook (355 posts). Instagram emerged as the most effective amplifier of AI-generated hate despite hosting fewer posts than the other platforms.

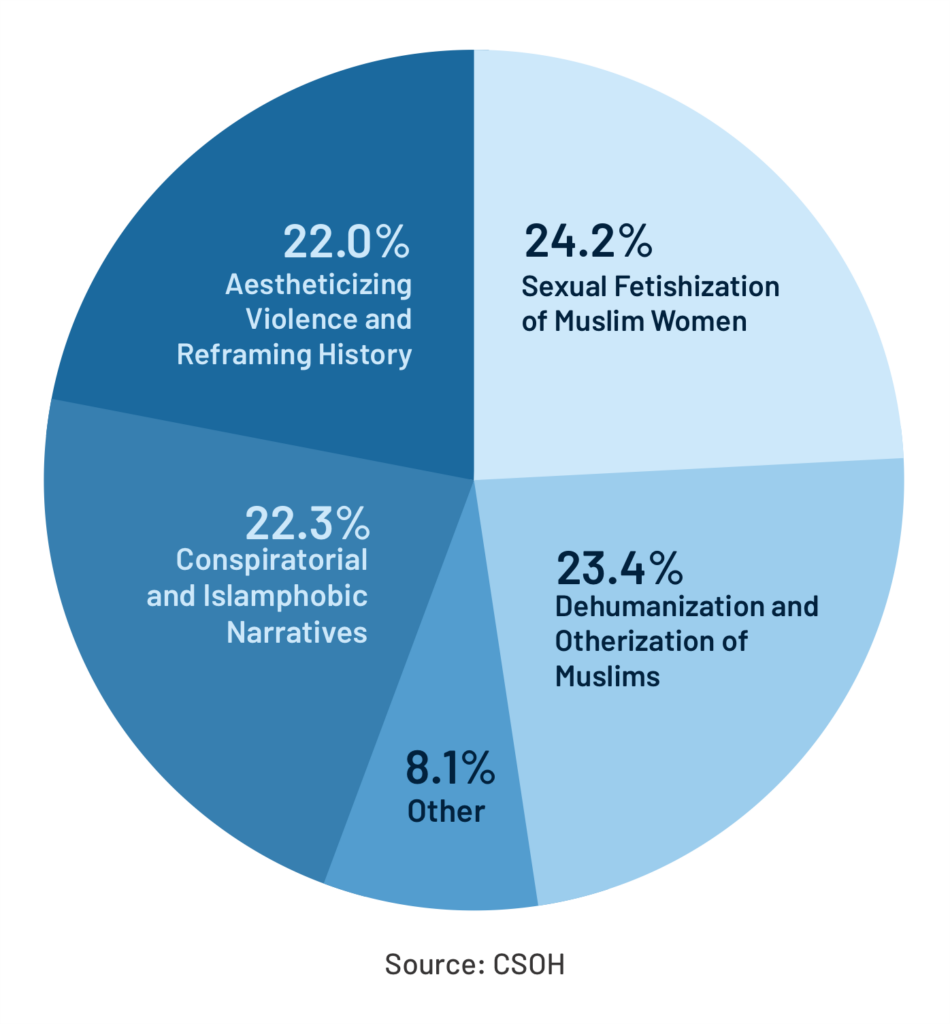

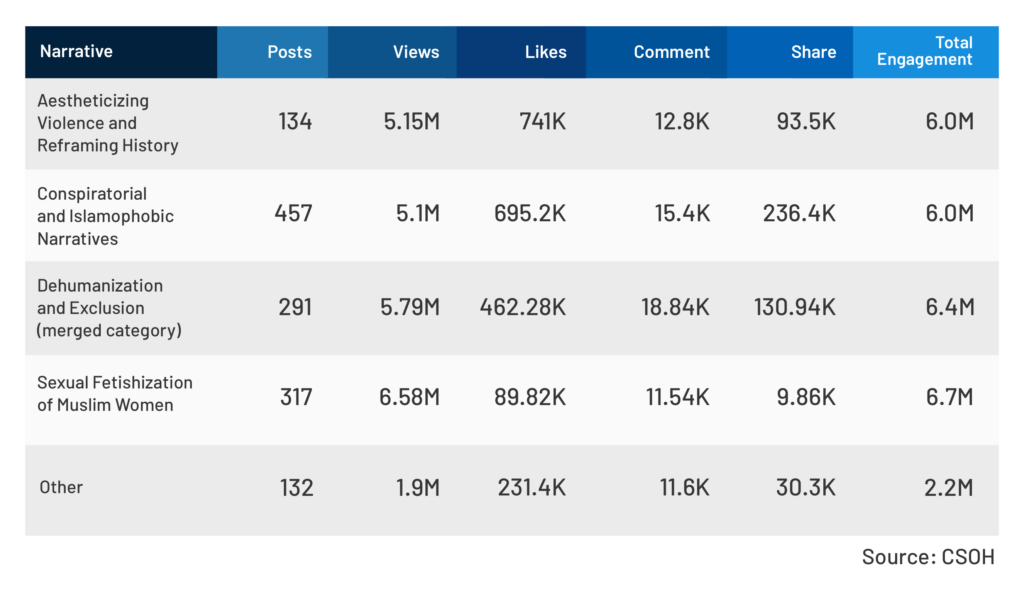

- The AI-generated hateful content clustered around four dominant themes: the sexualization of Muslim women, exclusionary and dehumanizing rhetoric, conspiratorial narratives, and the aestheticization of violence.

- The category of sexualized depictions of Muslim women received the highest engagement (6.7M interactions), revealing the gendered character of much Islamophobic propaganda, which fuses misogyny with anti-Muslim hate.

- Conspiracy theories such as ‘Love Jihad,’ ‘Population Jihad,’ and ‘Rail Jihad’ were widely reinforced through AI-generated imagery, framing Muslims as a perpetual threat to Hindu society and national security.

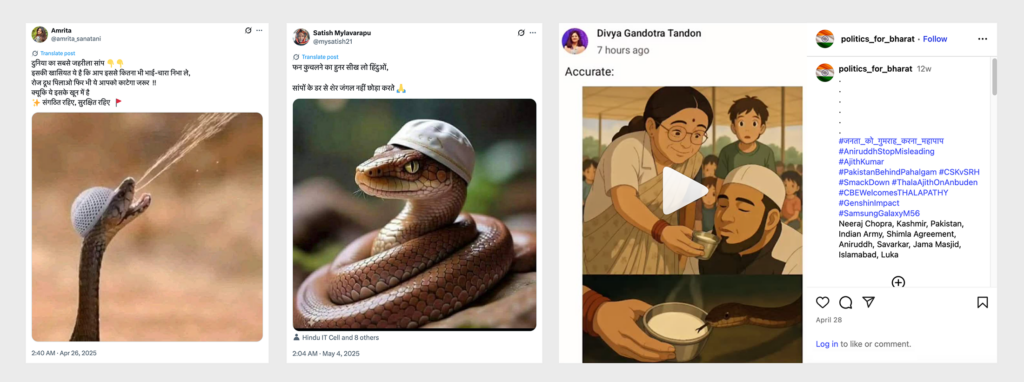

- AI-generated images depicted Muslims as snakes wearing skullcaps, a dehumanizing metaphor that framed them as deceptive, dangerous, and deserving of elimination.

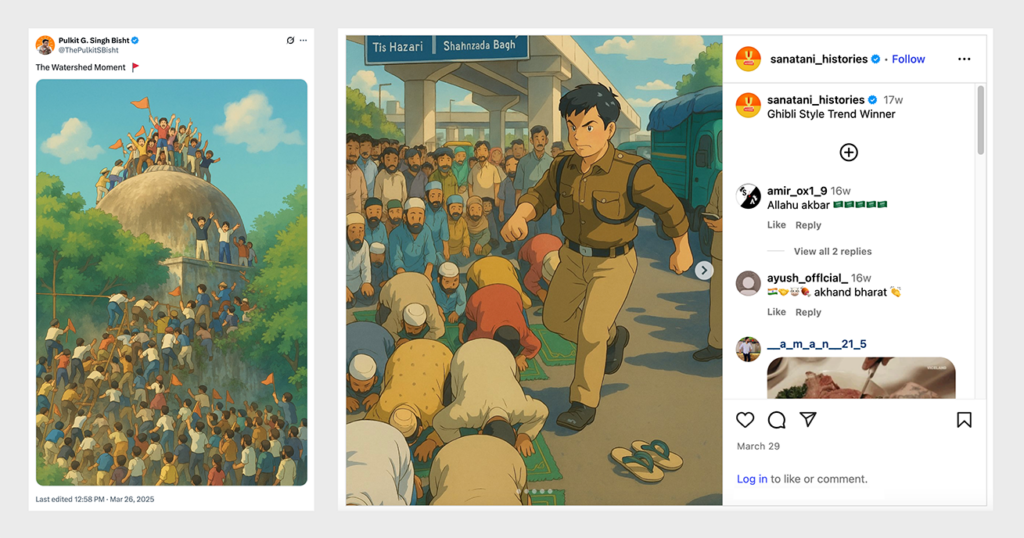

- Stylized and animated AI aesthetics (including Studio Ghibli–style imagery) made violent, hateful content appear palatable, even humorous, broadening its reach among younger audiences.

- Hindu nationalist media outlets, notably OpIndia, Sudarshan News, and Panchjanya, played a central role in producing and amplifying synthetic hate, embedding AI-generated Islamophobia into mainstream discourse.

- Across X, Facebook, and Instagram, 187 posts were reported for violating community guidelines. Only one of the flagged posts on X was removed at the time of writing this report.

Data Analysis

The report analyzed 1,326 posts from 297 accounts, including 146 accounts on X (formerly Twitter), 92 accounts on Instagram, and 59 accounts on Facebook, related to AI-generated anti-Muslim hate imagery and narratives. Of these accounts, 86 were verified. The 1,326 posts were selected because they (1) used AI-generated visuals and (2) contained explicit hateful references to Muslim identity, based on the UN definition employed in the report.

These posts received a total engagement of 27.3 million across X, Instagram, and Facebook. Of the 1,326 posts analyzed, 509 were from X, 462 from Instagram, and 355 from Facebook, accounting for 24.9 million engagements on X, 2.32 million on Instagram, and 152,100 on Facebook.

Engagement was calculated by summing up the available interactions on each platform.

- On X, this included views, likes, reposts, and comments;

- On Facebook, this included likes, shares, and comments;

- On Instagram, this included likes and comments; shares were included where available.

Total recorded engagement across the verified corpus was 27.3 million, comprising platform-reported views, likes, shares, and comments.
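As a rough illustration of the per-platform engagement calculation described above, the following Python sketch sums only the interaction types listed for each platform. The function name `post_engagement`, the dictionary layout, and the sample posts are hypothetical and are not drawn from the report's dataset; treating an unavailable count as zero is also an assumption.

```python
# Interaction types counted toward engagement on each platform, as listed above.
ENGAGEMENT_METRICS = {
    "x": ("views", "likes", "reposts", "comments"),
    "facebook": ("likes", "shares", "comments"),
    "instagram": ("likes", "comments", "shares"),
}

def post_engagement(platform, counts):
    """Sum the interactions that count for this platform.

    Metrics that are missing or unavailable (None) contribute 0 (assumption).
    """
    return sum(counts.get(metric) or 0 for metric in ENGAGEMENT_METRICS[platform])

# Hypothetical posts, for illustration only.
sample_x = {"views": 1000, "likes": 40, "reposts": 10, "comments": 5}
sample_ig = {"likes": 200, "comments": 12, "shares": None}  # share count unavailable

x_engagement = post_engagement("x", sample_x)
ig_engagement = post_engagement("instagram", sample_ig)
```

Because views are counted only on X in this scheme, totals across platforms are not directly comparable, which is worth keeping in mind when reading the platform breakdown.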

Platform Breakdown

- The posts in our dataset drew 24.5 million views, 2.2 million likes, 70.1k comments, and 501.1k shares across X, Instagram, and Facebook.

- These posts drew 24.9 million total engagements on X, 2.32 million on Instagram, and 152.1k on Facebook, with X accounting for the overwhelming share.

- Across platforms, X received 24.1 million views, 564.4k likes, 29.2k comments, and 179.8k shares; Instagram saw 1.53 million likes, 24.8k comments, and 313.1k shares; while Facebook had 119k likes, 16k comments, and 8.2k shares.
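The figures in this breakdown can be cross-checked with simple arithmetic. The sketch below, with values in thousands copied from the bullets above, sums the per-platform components and computes X's share of the 27.3 million total; the variable and function names are illustrative only.

```python
# Values in thousands, copied from the platform breakdown above.
reported = {
    "X": {"views": 24100.0, "likes": 564.4, "comments": 29.2, "shares": 179.8},
    "Instagram": {"likes": 1530.0, "comments": 24.8, "shares": 313.1},
    "Facebook": {"likes": 119.0, "comments": 16.0, "shares": 8.2},
}

def total_for(metric):
    """Add up one metric across all platforms that report it."""
    return sum(platform.get(metric, 0.0) for platform in reported.values())

likes_k = total_for("likes")
comments_k = total_for("comments")
shares_k = total_for("shares")

# X's engagement is the sum of its four components, including views.
x_total_k = sum(reported["X"].values())
x_share = x_total_k / 27300.0  # 27.3 million total engagement, in thousands
```

The component sums line up with the reported totals of 2.2 million likes and 501.1k shares; comments sum to 70.0k against the reported 70.1k, a gap consistent with rounding of the per-platform figures. X's components add up to roughly 24.9 million, or about 91 percent of total engagement.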

Main Themes

Each post was manually reviewed and assigned a primary thematic tag based on its visual or textual content. Four main trends emerged, with some overlap, that captured the majority of posts analyzed:

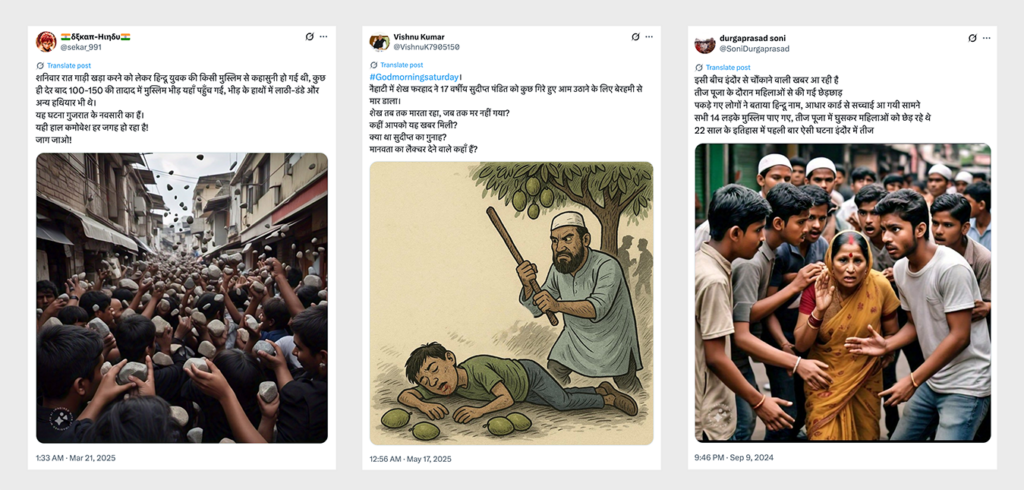

1. Conspiratorial Islamophobic Narratives: These narratives broadly suggest that Muslims as a community are engaged in conspiracies to undermine the national integrity or security of India. In posts, conspiracy theories about Muslims were frequently yoked to claims about Muslim sexual mores or the natural disposition of the community towards violence.

2. Exclusionary and Dehumanizing Rhetoric: This category consists of images that suggested or encouraged violence against Muslims. Images emphasize the dehumanization of Muslims through a number of tropes, including the use of animal imagery to describe the community, the ascription of Muslim hatred directed at non-Muslims, and the attribution of a desire on the part of Muslims to sabotage Indian property and undermine national interests.

3. Sexualization of Muslim Women: This category of images represented Muslim women in a sexualized manner, dehumanizing and rendering them as legitimate objects of sexual violence.

4. Aestheticization of Violent Imagery: This category of images used AI to link historical, conventional, or everyday images of Muslims with incidents of sectarian anti-Muslim violence, often with the effect of normalizing such violence.

5. Other: A number of posts targeted Muslims using AI-generated imagery without even a tentative thematic connection to the categories described above. These images were classified as ‘other.’

While the timeline of the study spans two years, most AI-generated harmful posts were concentrated within a shorter period, particularly from mid-2024 onward. Activity was minimal through 2023 and the first half of 2024, but began rising sharply in June 2024, with visible spikes in September 2024 and March 2025. The September spike coincided with the circulation of content around the ‘Rail Jihad’ conspiracy theory, while the March surge reflected the popularity of the Ghibli Art trend in AI-generated hateful imagery.

This pattern shows that the use of AI imagery to promote Islamophobic narratives only gained traction in 2024, marking a turning point in both volume and sophistication of content within the Hindu nationalist ecosystem. A key driver of this shift was the growing popularity and wider availability of advanced text-to-image tools, which made producing visuals easier. OpenAI’s integration of DALL·E 3 into ChatGPT in September 2023 likely accelerated the adoption of such tools, lowering barriers to use and fueling their incorporation into propaganda at scale.

Recommendations

The findings of this report make it clear that AI text-to-image technology has been weaponized within India’s volatile digital ecosystem for the production and circulation of harmful content targeting Muslims. In light of the patterns identified in this report, a multi-pronged response is merited. In particular, we emphasize that nearly all stakeholders in the digital ecosystem, including platforms, civil society organizations, and lawmakers, must take a proactive role in addressing the harms.

- Targeted legal provisions for AI-generated content: India’s existing legal framework, specifically the Information Technology Act, 2000, is not adequately equipped to address the unique challenges of Generative Artificial Intelligence (GAI), particularly text-to-image and video tools. The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 expanded intermediary responsibilities and introduced due diligence requirements for content removal, but do not provide a framework for identifying or regulating the production of AI-generated images and videos through GAI technology. The UNESCO Guidelines for the Governance of Digital Platforms, 2023 provide a basic outline of a rights-compatible approach that can be followed. Clear and auditable transparency duties must be placed directly on model providers, such as requiring comprehensive model and risk documentation. Specified high-risk uses such as Non-Consensual Intimate Images (NCII) and biometric imagery can be subjected to greater disclosure requirements involving social media platforms.

- Specifying adjudicatory authorities: The proposed regulation can also specify adjudicatory authorities that prevent the shift of proactive detection responsibilities to intermediaries. These could draw from the out-of-court dispute settlement process offered by Article 21 of the EU DSA. This would preserve both users’ right to freedom of expression and platforms’ ability to enforce community standards.

- Developer safety and transparency: AI developers and model hosts must share responsibilities in ensuring transparency regarding AI-generated imagery. In addition to clearly communicating that hateful use of such imagery is prohibited, developers should implement and continually refine strong detection, reporting, and moderation systems to identify misuse in real time.

- Provenance-first defaults and abuse prevention in AI tools: AI developers and model hosts must build in safety and transparency by default. This means that, rather than reacting to violations of user guidelines once they are reported, platforms should design their features so that they are difficult to abuse. Every image output should carry embedded provenance metadata, ensuring that synthetic content can be reliably traced back to its source.

- Standards for AI use in media: Media responsibility is a crucial pillar of maintaining a reliable information environment. India’s news media regulatory bodies, including the News Broadcasting & Digital Standards Authority (NBDSA), should expand their jurisdiction to explicitly cover AI-generated images and videos used in news content. Similarly, the Press Council of India should issue guidelines requiring clear disclosure whenever AI-generated images appear in news media and develop voluntary codes of practice to govern the ethical use of AI-generated content.

- Establishing open-source research databases: National regulatory authorities and social media companies must work to establish a coordinated civil society consortium enabling digital rights NGOs, fact‑checking collectives, and academic labs to act collectively as an independent watchdog on AI‑generated hate imagery. The consortium could build and maintain open, regularly updated datasets of synthetic hate images for research and model‑testing.

- Algorithmic transparency and independent audits: Tech platforms must assess and disclose how recommendation and ranking systems amplify AI-generated hateful content. Moreover, they should enlist the support of independent auditors to test amplification, downranking efficacy, repeat-offender handling, and cross-language performance, with public summaries and corrective action plans. Furthermore, platforms should publish standardized risk metrics, such as time to label and take down, recommendation rates for detected synthetic hate, and recidivism, disaggregated by language and state.

- Cross-platform early warning and joint response: Platforms should convene a privacy-preserving consortium of platforms, fact-checkers, and civil society organizations to detect and disrupt synthetic hate campaigns. Participants should exchange hashes, provenance signals, and campaign indicators to enable synchronized throttling, labeling, and lawful content takedowns.

- Friction for viral synthetic hate: Platforms should introduce graduated “circuit breaker” protocols to thwart the spread of harmful synthetic content. Platforms must consider stricter rate limits, demonetization, and link-out suppression for repeat-offender accounts, with delivery reinstated only after verified remediation.
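To make the hash exchange in the cross-platform early warning recommendation more concrete, the following Python sketch shows one minimal way participants might share digests of flagged images without sharing the images themselves. It is an assumption-laden illustration: the class `SharedHashList`, its methods, and the campaign label are hypothetical, and SHA-256 matches only byte-identical files, so cropped or re-encoded copies would require perceptual hashing, which is outside this sketch.

```python
import hashlib

def content_hash(image_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw file bytes."""
    return hashlib.sha256(image_bytes).hexdigest()

class SharedHashList:
    """Hypothetical in-memory registry of digests for flagged synthetic images."""

    def __init__(self):
        self._flagged = {}  # digest -> campaign label

    def report(self, image_bytes: bytes, campaign: str) -> str:
        """Register a flagged image by digest only; the image itself is not stored."""
        digest = content_hash(image_bytes)
        self._flagged.setdefault(digest, campaign)
        return digest

    def lookup(self, image_bytes: bytes):
        """Return the campaign label if this exact file was flagged, else None."""
        return self._flagged.get(content_hash(image_bytes))

# Illustrative usage with placeholder bytes standing in for image files.
shared = SharedHashList()
shared.report(b"fake-image-bytes", "campaign-001")
match = shared.lookup(b"fake-image-bytes")
no_match = shared.lookup(b"fake-image-bytes-edited")
```

Exchanging digests rather than files is one way such a consortium could remain privacy-preserving while still allowing synchronized labeling or throttling of known content.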
