Introduction

On June 24, 2025, New York assembly member Zohran Mamdani won the Democratic Party nomination for Mayor of New York City, a result that drew immediate national attention because it challenged both demographic and ideological assumptions about New York City politics. Within hours, public posts portraying Mamdani as an existential threat to the city began circulating on social media, spanning X, TikTok, Telegram, Meta, and other smaller platforms.

Several overlapping themes dominated the discourse: Islamophobia that targeted Muslim Americans, particularly Muslim New Yorkers, and framed Mamdani’s Muslim faith and Islam in general as inherently incompatible with public office; Cold War-style “red-baiting” that relabeled Mamdani’s democratic-socialist platform as communist infiltration; and nativist attacks questioning Mamdani’s right to be in the U.S. A fourth, transnational layer, driven by Hindu nationalist Indian and diaspora accounts, framed him as “anti-Hindu” and “anti-India.” We collectively refer to these posts as harmful discourse, which includes but is not limited to posts that pointedly express anti-Muslim hate directed at Mamdani as an individual or at the specific communities to which he is perceived to belong.

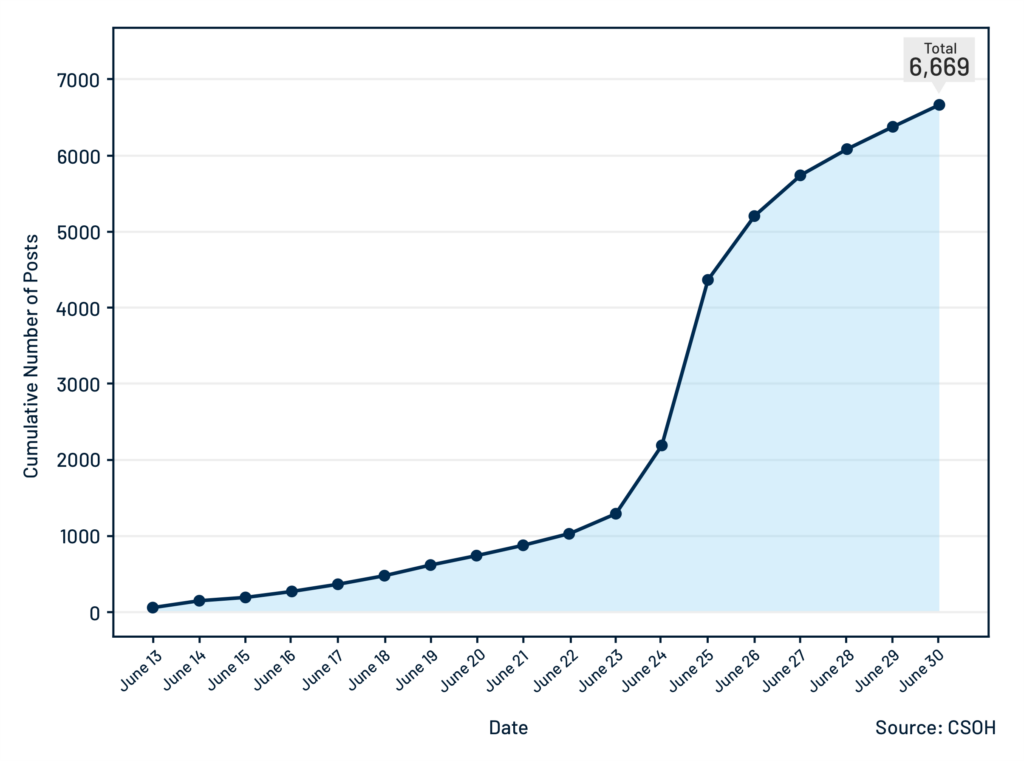

To trace how these harmful narratives gained momentum as well as the range of reactions that they elicited, we used a multi-stage search process. We first used a broad conceptual frame of anti-Muslim sentiment and Islamophobia to develop a set of keywords for searching public posts published between June 13 and June 30 on X, TikTok, Telegram, Meta, and other smaller platforms. This initial keyword search yielded 6,669 posts. These posts included both harmful narratives and themes, as well as counter-speech—challenges and opposition to expressions of anti-Mamdani hate and Islamophobia.

We then focused on the time window when posting activity related to the primary peaked, specifically June 23 through June 27. We ran a second round of analysis on the corpus of 6,669 posts using a narrower, more focused set of keywords. During this round of analysis, we specifically targeted channels known to promote hate. The objective of the second round was to identify high-volume, high-velocity posts, i.e., posts that drew significant and sustained engagement and had wide reach. This process yielded a smaller corpus of 1,933 posts. As with the broader corpus of 6,669 posts, the subset of 1,933 consisted of both posts that expressed hate and harmful sentiments (about 56% of the 1,933 posts) and posts that were supportive, neutral, or constituted counter-speech challenging hate and harmful sentiments (approximately 44%).
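The second-round selection can be pictured as a simple filter. The following is a minimal sketch under stated assumptions, not the pipeline actually used for this report: the `Post` fields, the function name `second_round`, the placeholder keyword, and the account names are all hypothetical. It keeps posts published within the June 23 to June 27 window that either match a narrower keyword or come from an account already known for hateful content.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Post:
    # Hypothetical record layout; the report does not describe its schema.
    text: str
    account: str
    published: date


def second_round(posts, start, end, narrow_keywords, flagged_accounts):
    """Keep posts dated within [start, end] that match a narrow keyword
    or were published by a flagged account."""
    selected = []
    for post in posts:
        if not (start <= post.published <= end):
            continue  # outside the peak window
        lowered = post.text.lower()
        keyword_hit = any(k.lower() in lowered for k in narrow_keywords)
        if keyword_hit or post.account in flagged_accounts:
            selected.append(post)
    return selected


# Illustrative data only; none of these values come from the report's corpus.
posts = [
    Post("placeholder_term appears here", "account_a", date(2025, 6, 24)),
    Post("unrelated text", "flagged_account", date(2025, 6, 25)),
    Post("placeholder_term but too early", "account_b", date(2025, 6, 15)),
    Post("unrelated text", "account_c", date(2025, 6, 26)),
]
subset = second_round(
    posts,
    start=date(2025, 6, 23),
    end=date(2025, 6, 27),
    narrow_keywords={"placeholder_term"},
    flagged_accounts={"flagged_account"},
)
print([p.account for p in subset])
```

Substring matching is the simplest possible choice here; a real keyword search would likely need tokenization and handling of spelling variants.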

We next thematically categorized and coded each of the 1,933 posts, which allowed us to examine the key narratives that gained traction around the primary date of June 24. Using quantitative keyword analysis in combination with qualitative thematic coding, we documented how different themes expressing harmful sentiments (which we term ‘hate frames’) combined or fused with one another and how quickly they spread. We also considered what the cumulative impact and spread of such sentiment implies for the 2025 general mayoral election in New York City. Our goal is to quantify the scale and mechanics of harmful digital speech and of the digital counter-speech that emerged in response, and to offer actionable steps for platforms and civil society groups so that similar surges do not escalate into real-world harm before November 2025.

Key Findings

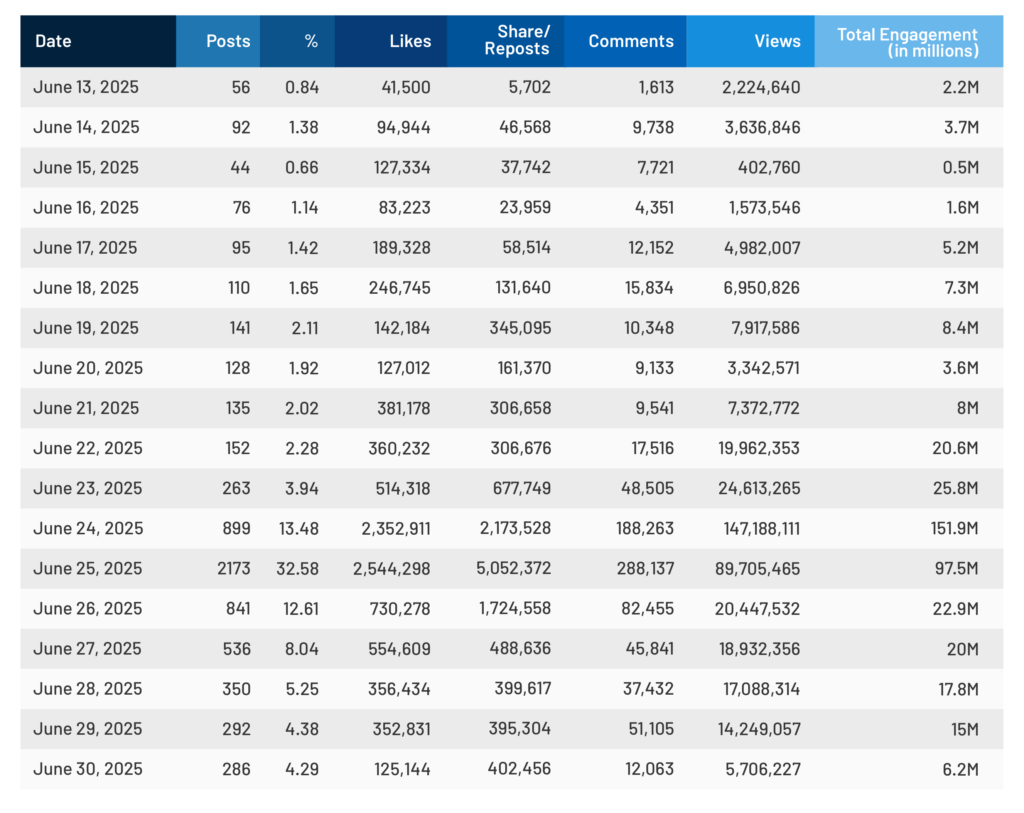

- High volume and engagement: Across the observation period, we collected 6,669 posts mentioning Zohran Mamdani and related themes. These posts generated substantial reach, totaling 419.2 million engagements (views, likes, shares/reposts, and comments combined). Activity was especially pronounced on June 24 and 25, which together accounted for 3,072 posts with 249.4 million engagements. Overall, this volume underscores the significant attention the topic received during the seventeen-day period.

- Primary-day flash surge: From June 13 to June 23, 2025, traffic about Zohran Mamdani that met our keyword search criteria held between 56 and 264 keyword-matched posts per day. On the day of the primary, June 24, 2025, the volume jumped to 899 keyword-matched posts. On June 25, 2,173 keyword-matched posts were published. This timeline illustrates a classic flash-mobilization pattern tied to an electoral milestone. A subset of 1,933 posts received closer scrutiny because they contained more pointed hate-related keywords or came from accounts with a track record of hateful content.

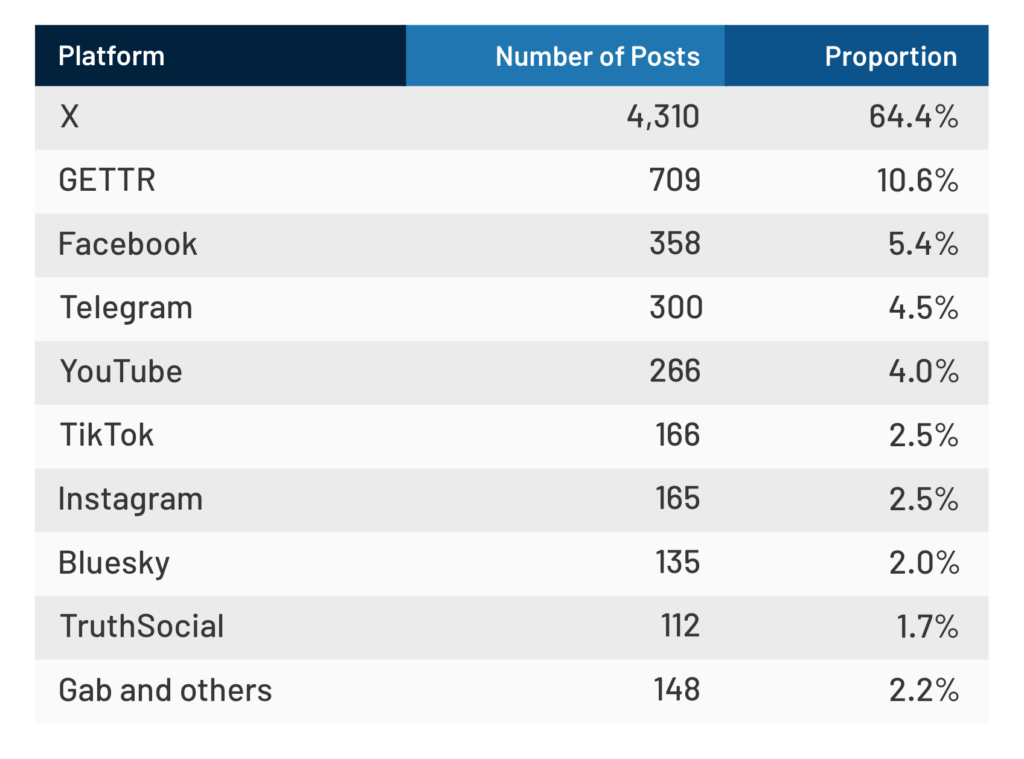

- Platform concentration and X’s dominance: Posts on X (formerly Twitter) comprised 4,310 of the 6,669 collected posts, representing 64.6% of the dataset. Beyond this primary platform, the numbers fall sharply. The next closest platform, GETTR, accounts for 709 posts or 10.6% of the dataset. The remaining thirteen platforms supply only 1,650 posts, 24.7% in total, with no single service exceeding 6% of the dataset. This sharp taper shows that X drives the conversation while other platforms capture only modest and fragmented portions of the narrative.

- Islamophobia anchors the narrative: Explicit anti-Muslim language, targeting Mamdani, Muslims, or Islam more broadly within the context of the mayoral primary results, was found in 39.4% of the 1,933 posts. These posts frame Islam itself, not any policy detail, as a public threat. The frequency confirms that Mamdani’s Muslim identity is a primary vector for delegitimization and for broader claims that Islam is at odds with American civic discourse.

- Fusion of religion and ideology magnifies reach: Among the 39.4% of posts flagged as Islamophobic, 51.2% also used an “ideological demonization” frame, combining faith with ideology to portray Mamdani’s politics as inherently dangerous. Similarly, 62.3% of posts that attacked his political ideology contained Islamophobic language, underscoring how closely faith-based and ideological attacks overlap. Phrases such as “Islamist socialism taking New York City” unify audiences worried about religion with those anxious about left-wing politics. Posts reflecting this fusion average 406,244.5 total interactions (views, likes, shares, and comments) per post, indicating that the rhetoric of blended fear travels farther than single-issue hate.

- Nativist calls for removal of Mamdani: A smaller but salient subset of 227 posts, comprising 14.3% of the reviewed dataset, depicts Mamdani as illegitimate because of his immigrant background and his specific history of immigration and naturalization. These posts propose remedies that range from deportation to citizenship revocation. Though numerically limited, these messages move beyond criticism into concrete exclusionary proposals.

- Hindu nationalist amplification: The analyzed dataset contains 65 unique posts that brand Mamdani “anti-Hindu.” This message spans both US-based far-right accounts and accounts based in South Asia, showing how a local New York race becomes fuel for a globalized grievance network.
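The platform shares and the two-day surge reported above can be rechecked with a few lines of arithmetic. This is a minimal sketch that uses only the counts stated in the findings; the variable names are illustrative, and the two-day shares at the end are derived here rather than quoted from the report.

```python
TOTAL_POSTS = 6_669
TOTAL_ENGAGEMENTS_M = 419.2  # millions, as reported

# Post counts per platform group, as stated in the findings.
platform_posts = {
    "X": 4_310,
    "GETTR": 709,
    "remaining thirteen platforms": 1_650,
}

# The three groups should account for the whole corpus.
assert sum(platform_posts.values()) == TOTAL_POSTS

# Percentage share of the corpus, rounded to one decimal place.
shares = {
    name: round(100 * count / TOTAL_POSTS, 1)
    for name, count in platform_posts.items()
}

# June 24 and June 25 keyword-matched posts, as reported.
peak_posts = 899 + 2_173
peak_engagements_m = 249.4  # millions, as reported

# Derived values (not stated in the report): the two peak days' share
# of all posts and of all engagements.
peak_post_share = round(100 * peak_posts / TOTAL_POSTS, 1)
peak_engagement_share = round(100 * peak_engagements_m / TOTAL_ENGAGEMENTS_M, 1)

print(shares)
print(peak_posts, peak_post_share, peak_engagement_share)
```

The per-platform shares reproduce the 64.6%, 10.6%, and 24.7% figures, and the two daily counts sum to the 3,072 posts cited for June 24 and 25.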

Recommendations for Social Media Platforms

- Strengthen platform safeguards during election periods: All major social media platforms should activate election-specific escalation protocols that fast-track review of harmful content. Posts that trigger one or more identified harmful categories should face algorithmic down-ranking or temporary share limits.

- Shared hash-matching for known hate content: Major platforms should join or expand the scope of hash-sharing consortia they already govern, such as the Global Internet Forum to Counter Terrorism’s (GIFCT) Terrorist Content Database, by formally adding Islamophobia and harmful speech under the same technical framework and governance rules. This will ensure that any image, video, or URL already flagged as Islamophobic or otherwise harmful is blocked, down-ranked, or warning-labeled the moment a user tries to repost it on a partner service. This cooperative approach limits the cross-platform spread of recycled memes.

- Repeat-offender friction: Accounts responsible for multiple violations in quick succession should face graduated friction: first, a mandatory “read before you share” interstitial; then, a 24-hour freeze on reposts and replies; and finally, suspension. This tiered approach preserves lawful speech while slowing the velocity of serial offenders.

- Virality checkpoints with context cards: When a post containing high-risk keywords (e.g., “jihadi” or “Sharia takeover”) crosses a predefined engagement threshold, platforms should automatically insert an interstitial context card. The card could provide concise background facts, links to authoritative sources, and an option to “share anyway.” The added friction is designed to curb impulsive amplification and would ensure users see corrective information before spreading harmful claims.

- Extend Community Notes to shared claims: Community Notes are a primary, crowd-sourced moderation tool on X, TikTok (Footnotes), YouTube (Information Panels), and Meta, where the feature is currently being tested. When a Community Note is rated helpful, platforms should treat that Note as the canonical correction for the underlying claim and automatically attach it to new and existing posts that repeat the same assertion, identified through exact-match and semantic clustering. This claim-level propagation would scale fact-checking instantly across thousands of duplicates, ensuring consistent context wherever the narrative appears.

- Boost corrective counter-speech: Ranking algorithms should give a modest relevance lift to posts that already contain credible rebuttals, fact checks, or helpful Community Notes, activating when the platform detects a spike in hateful narratives. Foregrounding these context-rich posts while leaving uncontextualized shares neutral or even slightly down-ranked would expose users to balanced information at a moment when false or hateful claims gain traction, reducing their persuasive power without suppressing lawful speech.

- Real-time transparency dashboard: For all users, platforms should publish near-real-time dashboards showing the number of harmful content reports received and median response times. Platforms may also encourage transparent communication with community groups and organizations. An open-source flagging portal can be developed for community organizations where all users can identify flagged content as well as who flagged the content and on what grounds.
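Two of the recommendations above, repeat-offender friction and virality checkpoints, describe rules simple enough to sketch in code. The following is an illustration under stated assumptions, not any platform's actual implementation: mapping the number of recent violations directly onto the three tiers, the `ENGAGEMENT_THRESHOLD` value, and all function names are hypothetical. The two keywords are the examples quoted in the recommendation.

```python
from enum import Enum


class Friction(Enum):
    NONE = "no friction"
    INTERSTITIAL = "read-before-you-share interstitial"
    FREEZE_24H = "24-hour freeze on reposts and replies"
    SUSPENSION = "suspension"


# Tiers in escalating order, following the recommendation's sequence.
LADDER = [
    Friction.NONE,
    Friction.INTERSTITIAL,
    Friction.FREEZE_24H,
    Friction.SUSPENSION,
]


def friction_for(recent_violations: int) -> Friction:
    """Assumption: each additional recent violation moves the account
    one step up the ladder, capped at suspension."""
    index = min(max(recent_violations, 0), len(LADDER) - 1)
    return LADDER[index]


# Example high-risk keywords quoted in the recommendation (lowercased).
HIGH_RISK_KEYWORDS = ("jihadi", "sharia takeover")
# Hypothetical threshold; the report does not specify a value.
ENGAGEMENT_THRESHOLD = 10_000


def needs_context_card(text: str, engagements: int) -> bool:
    """True when a post contains a high-risk keyword and has reached
    the engagement threshold."""
    lowered = text.lower()
    has_keyword = any(k in lowered for k in HIGH_RISK_KEYWORDS)
    return has_keyword and engagements >= ENGAGEMENT_THRESHOLD


print(friction_for(2).value)
print(needs_context_card("post mentioning Sharia takeover", 25_000))
```

In practice, "quick succession" would need a time window for counting violations, and keyword matching alone would also catch counter-speech that quotes these terms, so both rules would need human review behind them.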

Download the full report here.